Faster Tech, Slower Systems

Why AI Demands a New Operating Model

We’ve been saying technology moves at ‘breakneck speed’ for decades. AI does feel different — but then again, so did the internet, cloud, and mobile. Each notch on the exponential curve feels seismic in the moment.

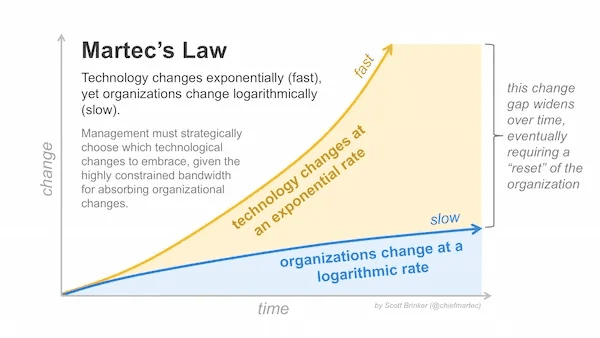

Scott Brinker phrased it well back in 2017: “Technology changes exponentially, but organizations change logarithmically.”

This holds true today, just in a new context. The context feels different, but organizations have faced the same challenge over and over: an ever-widening gap between the pace of technological change and their ability to evolve.

Scott Brinker, Martec’s Law (Source)

Something else Scott captured here is this idea that there is ultimately a reset that forces radical change. Resets are not inherently bad, but there’s a sense that organizations are facing a truly existential threat.

We’re seeing acorns falling, but are they soon-to-be meteors? I don’t know. No one knows.

I’m a designer and product person, but I love armchairing decision science and complexity. It’s one of the reasons I love working on the problems we’re taking on at Dotwork: how complex organizations will operate in the future. We think about how operating models keep up with the pace of change and weave in new technology that will drastically reshape the ways we work.

Complex systems often evolve to a tipping point, then shift rapidly in nonlinear ways, a behavior known as ‘self-organized criticality’. It’s often illustrated by a sandpile: as you add grains of sand, the pile grows steadily until a single grain triggers a landslide. You can’t predict exactly when these avalanches will occur or how large they’ll be. They tend to follow a power-law distribution: many small events, and a few very large ones that restore the system’s balance.
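If you want to watch the sandpile behave this way, here is a minimal sketch of the classic grid version of the model (usually credited to Bak, Tang, and Wiesenfeld). The grid size, the toppling threshold of 4, and the function name `drop_grains` are my own illustrative choices, not anything specific to how we think about organizations. Each grain lands on a random cell; any cell holding 4 or more grains topples and hands one grain to each neighbor, which can set off further topples.

```python
import random
from collections import Counter


def drop_grains(size=20, grains=5000, threshold=4, seed=0):
    """Drop grains one at a time on a size x size grid.

    Returns (grid, avalanche_sizes), where each avalanche size is the
    number of topplings triggered by that single grain.
    """
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanche_sizes = []

    for _ in range(grains):
        r, c = rng.randrange(size), rng.randrange(size)
        grid[r][c] += 1

        topples = 0
        unstable = [(r, c)] if grid[r][c] >= threshold else []
        while unstable:
            i, j = unstable.pop()
            if grid[i][j] < threshold:
                continue  # already relaxed by an earlier topple
            grid[i][j] -= threshold
            topples += 1
            if grid[i][j] >= threshold:
                unstable.append((i, j))
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                # Grains pushed past the edge simply fall off the table.
                if 0 <= ni < size and 0 <= nj < size:
                    grid[ni][nj] += 1
                    if grid[ni][nj] >= threshold:
                        unstable.append((ni, nj))

        avalanche_sizes.append(topples)

    return grid, avalanche_sizes


grid, sizes = drop_grains()
counts = Counter(sizes)
print("grains that caused no avalanche:", counts[0])
print("largest avalanche (topplings):", max(sizes))
```

Run it a few times with different seeds and the pattern is the one described above: most grains do nothing, a handful trigger cascades, and there is no way to tell from a single grain which kind it will be.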

Most of the time, organizations make small adjustments — incremental process improvements, localized tooling updates, team-level optimizations. But occasionally, an input (like a technological shift) triggers a phase transition that reshapes the entire org structure or business model.

Now, over two years into the modern AI era, it’s obvious we’re in the messy middle of a shift. The avalanches are all around us.

So to kick off this post, here’s the output of a thought experiment I had with AI that will either be comforting or anxiety-inducing, but I think it helps frame what we’ve seen talking to leaders from the Fortune 500 to mid-size companies:

Why change seems uneven and nonlinear: Some orgs appear frozen, others suddenly lurch forward. ‘Self-organized criticality’ suggests they’re all evolving toward criticality, but the timing and size of their ‘avalanches’ (AI adoption, restructuring, reinvention) are unpredictable. The phenomenon Scott Brinker outlined aligns with this.

Why old operating models break down: AI challenges long-standing assumptions like fixed roles, hierarchical planning, or centralized expertise. These assumptions accumulate as latent stress in the system, and AI exposes that stress suddenly. Instead of challenging, unlearning, and redesigning, many organizations will treat AI as a service to the operating model, not as a strategic advantage for it.

Why we need to seek long-term resilience over short-term optimization

When the sky seems like it’s falling, organizations seek stability. But from a ‘self-organized criticality’ view, there is no stable baseline, only a constant edge-of-chaos state. Radical change is inevitable. Resilience in an operating model is not about optimizing our way back to stability; it’s about absorbing that change on the way to a new equilibrium.

So what should we optimize for in the era of fast tech, slow orgs? I’ll give you my own ‘even over’ statements that shape how we build at Dotwork:

- Optimize for judgment even over efficiency

- Optimize for system design even over execution

- Optimize for context even over automation

Why? Because AI will make every organization more efficient. It will supercharge execution and improve automation across every organization. That’s a side-effect of an AI-driven operating model.

The real question is what AI won’t do. In the future of work, I believe we’ll make more informed decisions with AI-enhanced judgment. We’ll design systems that amplify the work we love — and delegate the work we don’t. And we’ll take on the harder, more human challenge: making sense of our environment, giving AI the deep context it needs to act in alignment with strategy as it evolves.

What we’re seeing

In the past year alone, we’ve seen generative AI go from novelty to necessity, with new capabilities emerging every month. Yet many organizations can’t keep up.

Planning cycles, decision processes, and hierarchies often feel stuck in a slower era.

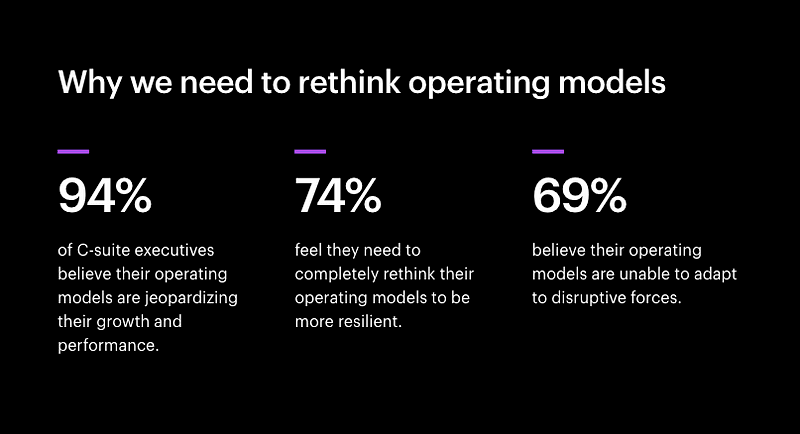

There’s a growing gap between what’s technologically possible and what companies can actually absorb. Recent research from Accenture backs this up: 94% of executives believe their current operating models are holding back growth, and 69% admit their models can’t adapt to disruptive forces. In other words, while tech sprints ahead, we feel pressure to change the way we operate dramatically, but it isn’t changing.

Accenture, Rethinking Operating Models (Source)

This disconnect isn’t for lack of awareness.

Most leadership teams see the tech headlines and feel the pressure. They know AI could revolutionize how they operate — making processes smarter, decisions faster, teams more effective. But knowing is one thing; doing is another.

The result is a kind of organizational whiplash: frontline teams are inundated with new tools and data, while legacy processes and cultures resist change.

It’s as if the organization’s nervous system is out of sync with its environment. Everyone senses the potential of moving faster and smarter, but the day-to-day reality is friction, bottlenecks, and what feels like missed opportunities.

Strain on Leaders and Teams on the Ground

What does this gap feel like on the ground? For leadership, it’s a mix of excitement and anxiety.

They champion innovation publicly, yet privately many worry their organization’s immune system will reject the change. Leaders are realizing that pouring money into AI experiments doesn’t automatically translate into value.

For teams and middle management, there’s often more frustration. They’re caught between new technology mandates and old ways of working. Employees find themselves spending more time navigating clunky interfaces and piecing together data than actually making decisions. At best, they’re able to use these new tools to make their daily work more efficient, but they don’t want those efficiency gains mistaken for an opportunity to reduce headcount.

Every “digital transformation” initiative seems to add another tool without removing an old one. It’s no surprise that so many still rely on spreadsheets for strategic work. Legacy enterprise tools just haven’t been up to the task. When official systems can’t adapt, people default to the familiar (or the best of the worst), even if it’s manual.

This status quo breeds cynicism: teams see innovation theater from on high, but on the ground they’re still copy-pasting data between disconnected systems and sitting through meetings to reconcile conflicting reports.

The promise of AI-enabled productivity often feels very far from their daily reality.

The human cost here is real. Leadership pushes for speed and agility, but the org’s processes create drag. There’s a growing sense of “we could be doing so much more, if only we weren’t held back by our own system!”

Morale suffers when teams realize that competitors or startups can seemingly pivot in weeks while their initiatives wait months for approvals and alignment. This is the lived experience of the fast-tech/slow-org divide: a combination of exhilaration about what’s possible and frustration with what’s actually happening.

Why AI Isn’t a Plug-and-Play Solution

Amid this tension, many organizations attempt a quick fix: layer AI on top of existing processes and hope for a miracle.

Getting value at scale means rewiring parts of the enterprise, not just sticking an AI widget on top. That means revisiting everything from processes and data architecture to roles and skills. AI can certainly automate tasks, but if those tasks live inside a calcified system, you’re likely optimizing (or creating workarounds for) a legacy operating model.

The upshot is that AI initiatives force a confrontation with the operating model. Organizations quickly discover that their old org charts, siloed departments, quarterly review cycles, and rigid IT systems are simply not geared for the fluid, real-time, probabilistic nature of AI-driven work.

You can’t get the agility and intelligence AI promises by papering over structural cracks. This is why the conversation is shifting from “What AI use case can we deploy?” to “How do we need to work differently in the AI era?”

Treating the Operating Model as a Continuously Evolving Product

Here’s the crux: to close the fast-tech/slow-org gap, companies must start treating their operating model as a product — a product that is never “finished” but continuously evolving.

In today’s environment, the way your organization works should be under constant development, just like your external products and services are. This is a mindset shift.

Traditionally, an “operating model” might be defined by a one-time consultant slide deck or a re-org memo that, once announced, stays static until the next crisis. Instead, we need to manage how we operate as a living, breathing, improvable thing.

What does it mean to treat the operating model as a product?

First, it means having an owner (or team) responsible for continuously improving the model — the same way a product manager iterates on a product or service.

Second, it implies versioning and experimentation. You pilot new ways of working on a small scale, gather feedback, and then scale up what works. In practice, that could look like experimenting with a new cross-functional team setup in one unit, or trialing an AI-assisted workflow for one process before rolling it out broadly.

Culturally, it requires embracing continuous change. Instead of big bang transformations every few years (with all the buzzwords that come with them), the organization is in a state of ongoing evolution.

This can sound unsettling, but in practice it’s energizing: it frees teams to adapt without waiting for permission from a years-in-the-making transformation program or hoping a tool can be a silver bullet.

The product mindset toward the operating model also emphasizes outcomes over theater. For example, don’t cling to a quarterly planning ritual if it’s outlived its usefulness — if a lighter, more continuous planning process yields better alignment, adopt it.

The point is not to change for change’s sake, but to relentlessly optimize how the organization functions, in service of its strategic goals. In a world where conditions change rapidly, this is the only sustainable way to operate.

The organizations that thrive will be those that can update how they work as easily as updating software — not via painful overhauls, but through a series of small, frequent improvements.

Treating the operating model as a product makes it a first-class asset: something to be designed, maintained, and evolved deliberately.

AI’s Real Role: Augmenting Awareness, Alignment, Decision Quality, and Information Gathering

When you shift your mindset in this way, the role of AI in the organization also comes into clearer focus. Rather than viewing AI primarily as an automation tool to cut costs or headcount, leading companies see it as a decision-making amplifier — a means to enhance human awareness, alignment, and judgment across flattening teams.

Yes, AI can automate routine tasks, but its bigger promise is making the organization smarter as a whole. It’s about augmenting human capability, not replacing it.

Awareness: In large organizations, important signals (a shift in customer behavior, a risk/escalation, a stale decision) often take ages to percolate to the right people. AI can change that. Leaders and teams can become aware of emerging information, problems, or opportunities far sooner, with clear context.

Alignment: One of the biggest challenges as companies scale is keeping everyone aligned to the current reality. Plans and budgets made last quarter often don’t reflect this quarter’s circumstances, yet many teams are still marching to outdated assumptions. AI can serve as an always-updating coordination mechanism. Intelligent systems can continuously reconcile data from different silos, so there’s a single source of truth about what’s going on. People can trust that when they make a decision, it’s based on the latest information, not last quarter’s snapshot.

Decision quality: Good decisions require both hard data and contextual understanding, and AI can boost both. Beyond analytics, AI coupled with a rich semantic context can remind decision-makers of relevant context, challenge cognitive biases, flag conflicting objectives, and even act as a strategic sparring partner (through methods like pre-mortems or the Six Thinking Hats). The aim is to raise the quality of decisions by making sure they’re informed by the full picture and by collective organizational learning, not just the loudest voice in the room or the narrow view of a single function.

Information Gathering: Everyone’s talking about the new capabilities for extracting useful information from unstructured data like Slack conversations, docs, and transcripts. The other half matters just as much: data entry sucks. Everyone hates status updates, key result check-ins, roadmaps for multiple audiences, and following up on dependencies. AI can take on much of that burden by drafting them from the work that’s already happening.

This isn’t automation; it’s augmenting and streamlining human coordination. It’s a focus on building better environments where teams and leaders can do high-value work with high context.

When your operating model is wired to take advantage of AI, you get an organization that’s more aware of itself and its environment, more aligned in its actions, and consistently making higher-quality decisions.

Those advantages compound over time into significantly better performance, efficiency and execution downstream. It’s the result of systematically weaving AI into the fabric of how you operate, rather than slapping it on top.

Laying the Groundwork: Semantic Context and Orchestration

We believe two technical pillars will become crucial: semantic context and orchestration.

Semantic context means having a shared, machine-readable map of the enterprise — often called an ontology — that defines how everything is related in our business (the people, the teams, the goals, the processes, the measures).

It’s a living knowledge graph that captures the meaning of our work. Think of it as the “nouns” of the organization. When an enterprise has this in place, AI and humans are speaking the same language.

It captures as much of the ‘reality’ of the organization as it can model.

In other words, a digital twin of the organization’s knowledge, constantly updated. This semantic layer is what allows AI to be context-aware. It’s the difference between an AI knowing a bunch of data points versus understanding how those data points connect across your business. With a robust ontology, an agent can reason because the relationships are explicitly modeled.
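To make “explicitly modeled relationships” a little more concrete, here is a toy sketch of a semantic layer as a set of subject-relation-object facts. Everything in it is hypothetical: the `Ontology` class, the relation names (`owns`, `supports`, `measured_by`), and the teams and goals are invented for illustration, and a real ontology would be far richer than an in-memory dictionary.

```python
from collections import defaultdict


class Ontology:
    """A tiny in-memory graph of (subject, relation, object) facts."""

    def __init__(self):
        self._out = defaultdict(list)  # subject -> [(relation, object), ...]

    def relate(self, subject, relation, obj):
        self._out[subject].append((relation, obj))

    def related(self, subject, relation):
        """Objects directly connected to `subject` by `relation`."""
        return [o for r, o in self._out.get(subject, []) if r == relation]

    def trace(self, start, relations):
        """Follow a chain of relations from `start`; return the nodes reached."""
        frontier = [start]
        for relation in relations:
            frontier = [o for node in frontier for o in self.related(node, relation)]
        return frontier


# Hypothetical example data.
org = Ontology()
org.relate("Checkout Team", "owns", "Reduce cart abandonment")
org.relate("Reduce cart abandonment", "supports", "Grow online revenue")
org.relate("Reduce cart abandonment", "measured_by", "Cart abandonment rate")
org.relate("Payments Team", "owns", "Add local payment methods")
org.relate("Add local payment methods", "supports", "Grow online revenue")

# Which strategic goals does the Checkout Team's work ultimately support?
strategic_goals = org.trace("Checkout Team", ["owns", "supports"])
print(strategic_goals)
```

The point of the sketch is the `trace` call: because “team owns initiative” and “initiative supports goal” are stored as relationships rather than buried in documents, a person or an agent can walk from a team to the strategy it serves without guessing.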

Orchestration refers to the ability to coordinate and connect the dots across multiple teams and systems.

As we introduce more AI into operations, we’ll have human decision-makers and AI agents working together. Orchestration ensures human and machine operate in harmony, and that requires a well-designed operating model.

We’re essentially talking about an operating system for the organization: one that not only knows the state of everything (through the ontology) but can also choreograph operations across humans and agents. When a disruptive event happens or a strategic change is made, the organization can respond as a unified whole, with AI agents and people acting with up-to-date strategic context.
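As a rough illustration of the idea (and only that), here is a sketch of an orchestrator that hands every event to both agents and people along with the same snapshot of strategic context. The `Orchestrator` class, the `goal_changed` event, and the role names are all made up for this example; it is a thought aid, not a description of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Orchestrator:
    context: dict = field(default_factory=dict)         # current strategic context
    agent_handlers: dict = field(default_factory=dict)  # event type -> [callables]
    human_routes: dict = field(default_factory=dict)    # event type -> [roles]
    human_inbox: list = field(default_factory=list)     # tasks waiting on a person

    def on_agent(self, event_type, handler):
        self.agent_handlers.setdefault(event_type, []).append(handler)

    def on_human(self, event_type, role):
        self.human_routes.setdefault(event_type, []).append(role)

    def publish(self, event_type, payload):
        # Agents and humans both receive the same snapshot of context.
        snapshot = dict(self.context)
        notes = [
            handler(payload, snapshot)
            for handler in self.agent_handlers.get(event_type, [])
        ]
        for role in self.human_routes.get(event_type, []):
            self.human_inbox.append({
                "role": role,
                "event": event_type,
                "payload": payload,
                "context": snapshot,
                "agent_notes": notes,
            })
        return notes


# Hypothetical usage.
orc = Orchestrator(context={"priority": "retention"})
orc.on_agent(
    "goal_changed",
    lambda payload, ctx: f"Re-rank backlog for {payload['goal']} under priority {ctx['priority']}",
)
orc.on_human("goal_changed", "Head of Product")

notes = orc.publish("goal_changed", {"goal": "Grow online revenue"})
print(notes)
print(orc.human_inbox[0]["role"])
```

The design choice worth noticing is that the agent’s output is attached to the human’s task instead of being acted on silently, so the person decides with the agent’s work and the current context in front of them.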

As the foundation of an operating model in the age of AI, your organization needs a ‘central nervous system’ that connects front-line data to meaning (semantic context) and connects strategic intent to action (orchestration). This is how you continuously align, adapt, and orchestrate at scale.

Following up with Part 2

We’ll explore these concepts of semantic context and orchestration in depth in Part 2. For now, it’s enough to recognize that they are the technological enablers of everything we’ve discussed in Part 1.

To be honest, the current environment can seem incredibly daunting, but it helps to remember the core principles we’ve outlined here: treat change as an unavoidable constant, treat your operating model as a product, and treat AI as an enabler of human potential.

In the next part, we’ll dive into how you can start building this foundation. And of course, Dotwork provides powerful scaffolding for this transition as an operating system purpose-built for the AI era. We’d love to show you how!